Alexander Martin

Ph.D. Student at Johns Hopkins University

I am a Ph.D. student at Johns Hopkins University, advised by Dr. Ben Van Durme. I am broadly interested in multimodal understanding and retrieval, especially toward advancing end-to-end content generation and reasoning. My current research focuses on generating text that is grounded in retrieved documents and videos. The north-star goal of my research is to take a query from a user and render a Wikipedia-style response with integrated multimodal information.

To that end, my work centers on generating Wikipedia-style text grounded in both documents and videos. I have published on the following topics:

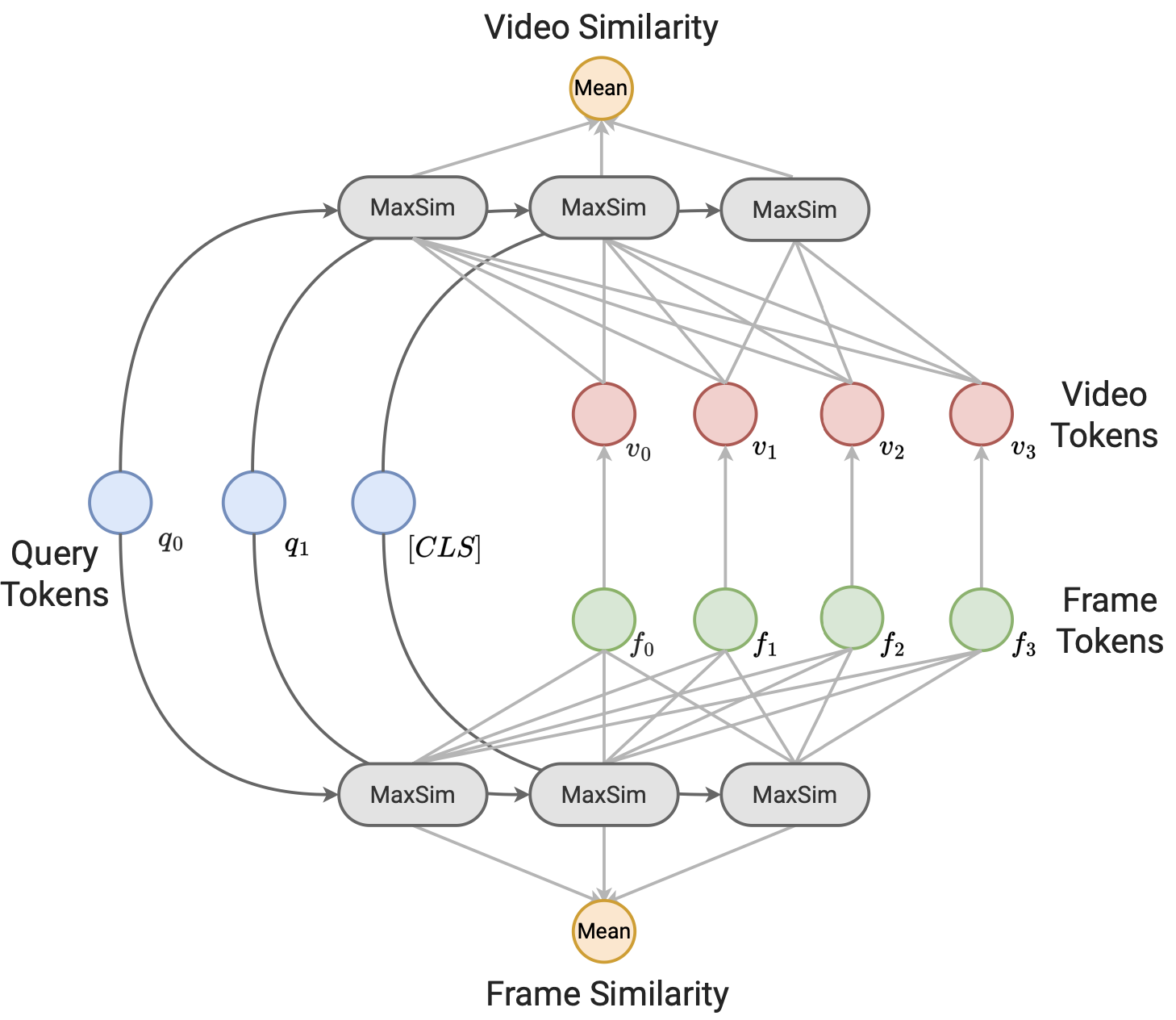

- Video retrieval in multilingual, real-world settings with efficient retrieval models.

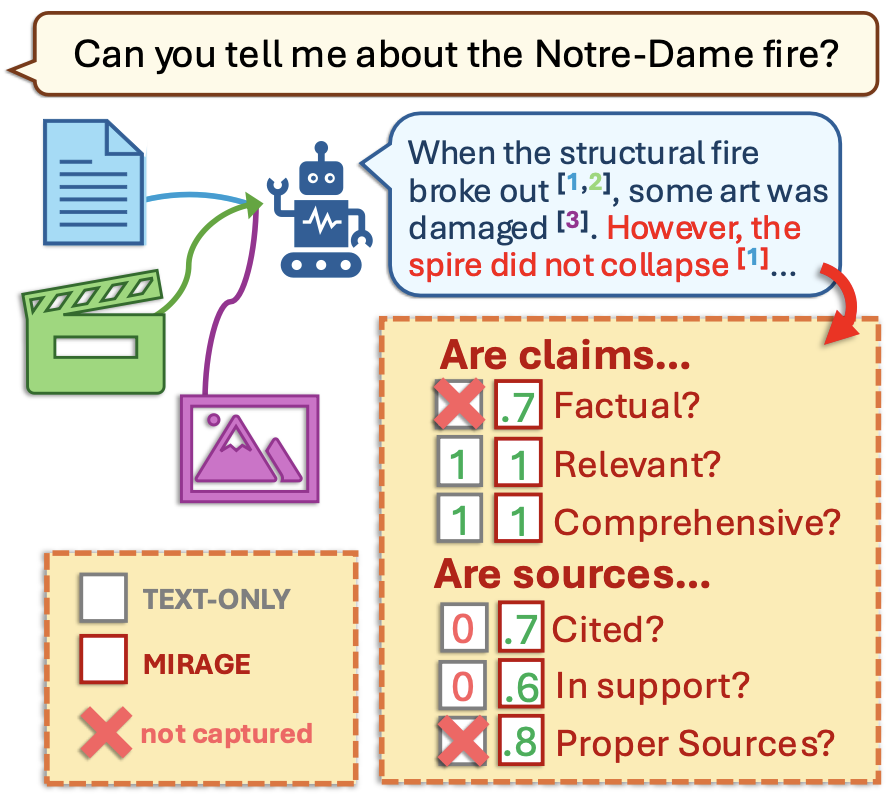

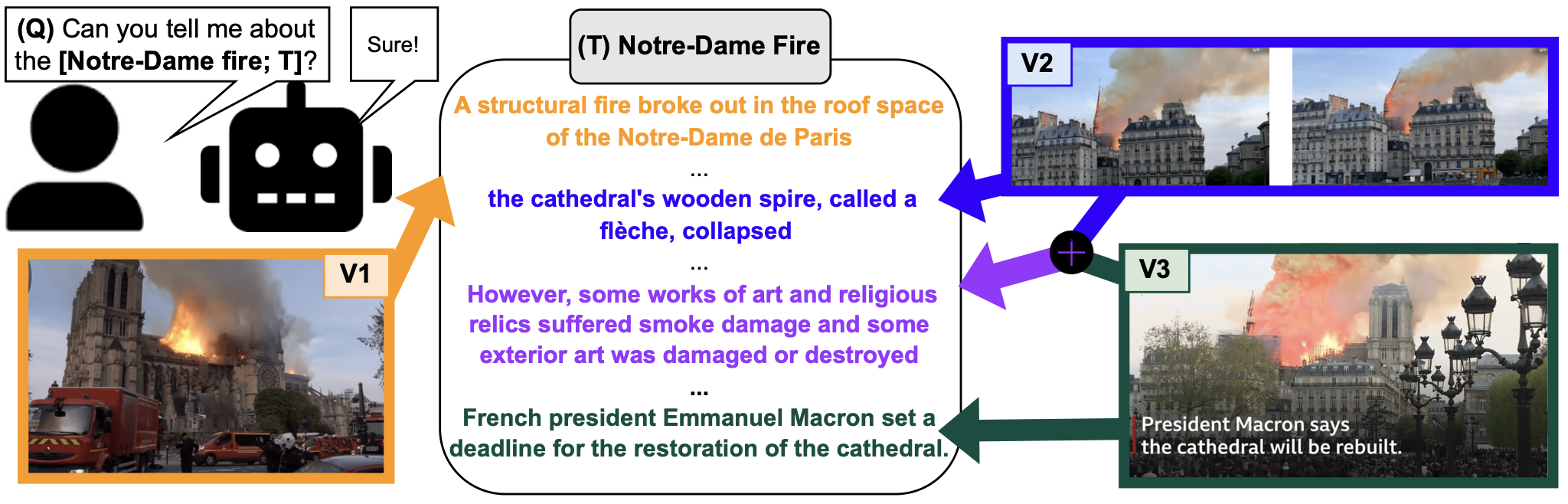

- Grounding information in cross-document and video-text settings.

- Conditional text generation from multiple videos, from documents, and across multiple documents.

Research keywords: video understanding, multimodal RAG (retrieval-augmented generation), multimodal generation, and grounded generation.

Before Johns Hopkins, I received my B.S. from the University of Rochester, where I was advised by Dr. Jiebo Luo and Dr. Aaron Steven White.

[Resume]

News

| Date | News |
|---|---|
| Feb 26, 2025 | 2/2 papers accepted at CVPR 2025! |
| Aug 26, 2024 | Started my Ph.D. at JHU |